Slurm advanced guide

Job array

Job arrays offer a mechanism for submitting and managing collections of similar jobs quickly and easily; job arrays with millions of tasks can be submitted in milliseconds (subject to configured size limits). All tasks in an array must have the same initial options (e.g. size, time limit), but some of these options can be changed after the job has begun execution with the scontrol command, by specifying either the JobID of the whole array or the ID of an individual task (ArrayJobID_ArrayTaskID).

Full documentation: see the job arrays page of the official Slurm documentation.

Possible values for --array:

#SBATCH --array=1,2,4,8

#SBATCH --array=0-100:5 # step of 5: equivalent to 0,5,10,15,...,100

#SBATCH --array=1-50000%200 # at most 200 tasks running at the same time
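To check which task IDs a given --array value produces, the small Bash function below expands the three forms listed above. It is a hypothetical helper written for this guide, not part of Slurm: it assumes non-negative integers and drops the %N throttle, which limits how many tasks run at once but not which IDs exist.

```shell
#!/bin/bash
# Hypothetical helper (not part of Slurm): print the task IDs selected by an
# --array value such as "1,2,4,8", "0-100:5" or "1-50000%200".
# Assumes non-negative integers; the %N throttle suffix is ignored.
expand_array_spec() {
  local spec="${1%%[%]*}"          # remove the optional %N suffix
  local item start end step
  local -a items ids=()
  IFS=',' read -ra items <<< "$spec"
  for item in "${items[@]}"; do
    step=1
    if [[ "$item" == *:* ]]; then
      step="${item##*:}"           # step size after the colon
      item="${item%%:*}"
    fi
    if [[ "$item" == *-* ]]; then
      start="${item%%-*}"          # range start
      end="${item##*-}"            # range end
    else
      start="$item"                # single ID
      end="$item"
    fi
    ids+=($(seq "$start" "$step" "$end"))
  done
  echo "${ids[*]}"
}

expand_array_spec "1,2,4,8"      # prints: 1 2 4 8
expand_array_spec "0-20:5"       # prints: 0 5 10 15 20
expand_array_spec "1-5%2"        # prints: 1 2 3 4 5
```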

Example 1: Processing many files

#!/bin/bash

#SBATCH --array=0-29 # 30 tasks, one per FASTQ file

INPUTS=(../fastqc/*.fq.gz)

# each task processes the file at index SLURM_ARRAY_TASK_ID (0 to 29)

srun fastqc "${INPUTS[$SLURM_ARRAY_TASK_ID]}"

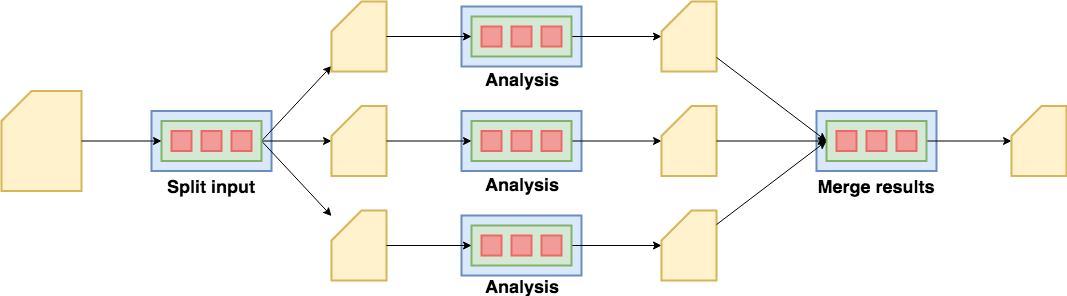

- The process is represented in the figure below:
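The --array=0-29 range in Example 1 has to match the number of input files. One way to avoid hard-coding it is to count the files at submission time, since options given on the sbatch command line override the #SBATCH lines in the script. The function below is a hypothetical helper sketched for this guide; it assumes the inputs are files ending in .fq.gz in a single directory, and that the directory does not change between submission and execution, so the glob expands to the same sorted list in every task. The script name fastqc_array.sh in the usage comment is also hypothetical.

```shell
#!/bin/bash
# Hypothetical helper: print an --array range with one index per .fq.gz file
# in the given directory, e.g. "0-29" for 30 files. Fails if there are none.
array_range_for() {
  local dir="$1"
  local -a files
  shopt -s nullglob                # an empty directory gives an empty array
  files=("$dir"/*.fq.gz)
  shopt -u nullglob
  if (( ${#files[@]} == 0 )); then
    echo "no .fq.gz files in $dir" >&2
    return 1
  fi
  echo "0-$(( ${#files[@]} - 1 ))"
}

# Usage on the cluster (the command-line --array overrides the #SBATCH line):
#   sbatch --array="$(array_range_for ../fastqc)" fastqc_array.sh
```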

Example 2: Testing many parameters

#!/bin/bash

#SBATCH --array=0-4 # 5 tasks for 5 different parameter values

PARAMS=(1 3 5 7 9)

srun a_software "${PARAMS[$SLURM_ARRAY_TASK_ID]}" a_file.ext

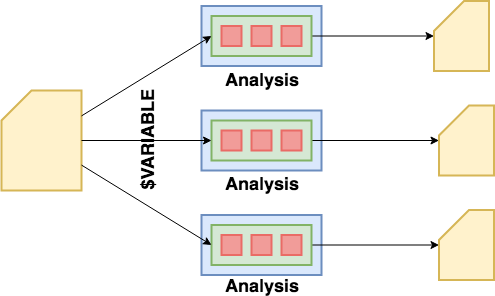

- The process is represented in the figure below:
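The same task index can drive more than one parameter. As a hypothetical extension of Example 2, the sketch below sweeps every combination of the five values in PARAMS with two input files (FILES and its contents are made up for illustration). The task ID is decoded with a modulo and an integer division, so the array needs 5 * 2 = 10 tasks (--array=0-9).

```shell
#!/bin/bash
# Hypothetical two-dimensional sweep: 5 parameters x 2 files = 10 tasks.
PARAMS=(1 3 5 7 9)
FILES=(a_file.ext b_file.ext)    # hypothetical second dimension

# Print "<param> <file>" for a task ID between 0 and 9:
# the parameter cycles fastest, the file changes every 5 tasks.
decode_task() {
  local id="$1"
  local n_params=${#PARAMS[@]}
  echo "${PARAMS[id % n_params]} ${FILES[id / n_params]}"
}

# Inside the array job (with #SBATCH --array=0-9):
#   read -r param file <<< "$(decode_task "$SLURM_ARRAY_TASK_ID")"
#   srun a_software "$param" "$file"
```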

Job dependency

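To make Slurm wait until a job has finished before starting another one, use the sbatch option --dependency. With afterok:JOBID, the new job starts only if job JOBID completed successfully (exit code 0); when JOBID is a job array, the dependent job waits for all of its tasks.

For example, multiqc aggregates the FastQC reports, so it must run only after every fastqc task has finished: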

fastqc.sh

#!/bin/bash

#SBATCH --array=0-29 # 30 tasks

INPUTS=(../fastqc/*.fq.gz)

fastqc "${INPUTS[$SLURM_ARRAY_TASK_ID]}"

$ sbatch fastqc.sh

Submitted batch job 3161045

multiqc.sh

#!/bin/bash

multiqc .

$ sbatch --dependency=afterok:3161045 multiqc.sh
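Copying the job ID by hand is error-prone. The sbatch option --parsable prints only the job ID (followed by ;clustername on multi-cluster setups), so both submissions can be chained in a small script. The function name submit_pipeline is hypothetical, and the sketch assumes fastqc.sh and multiqc.sh are in the current directory.

```shell
#!/bin/bash
# Submit the fastqc array, then multiqc with an afterok dependency on it.
submit_pipeline() {
  local jid
  # --parsable makes sbatch print only "jobid" (or "jobid;cluster")
  jid=$(sbatch --parsable fastqc.sh) || return 1
  jid="${jid%%;*}"                 # drop an optional ";cluster" suffix
  sbatch --dependency=afterok:"$jid" multiqc.sh
}

# Usage on the cluster:
#   submit_pipeline
```

Because the dependency is on the array's job ID, multiqc.sh waits for all 30 fastqc tasks and, with afterok, will not start if any of them fails.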

ntasks

A job can run several tasks, which are launched in parallel, each with the resources you requested. The number of tasks is set with the following Slurm option:

--ntasks/-n: number of tasks (default is 1)

With the srun command:

$ srun hostname

cpu-node-1

$ srun -n 2 hostname

cpu-node-1

cpu-node-1

$ srun --nodes 2 -n 2 hostname

cpu-node-1

cpu-node-2

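With -n 2, srun runs hostname once per task, so two copies run in parallel. Without --nodes, both tasks can be placed on the same node (here cpu-node-1); adding --nodes 2 spreads them over two nodes.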

With the sbatch command:

test.sbatch:

#!/bin/bash

#SBATCH --ntasks=3

#SBATCH --nodes=3

srun hostname

Running sbatch test.sbatch gives output similar to the following (written by default to the file slurm-JOBID.out):

cpu-node130

cpu-node131

cpu-node132

Here, --ntasks=3 combined with --nodes=3 makes srun launch three hostname tasks in parallel, one on each node.
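When several tasks run the same command, each task can find out which one it is: srun sets SLURM_PROCID (0 to ntasks-1) and SLURM_NTASKS in every task's environment. The sketch below uses them to split a list of items round-robin between tasks; the function name my_items and the worker.sh script mentioned in the comment are hypothetical.

```shell
#!/bin/bash
# Hypothetical worker logic: print the items this task should handle.
# Task k of N takes items k, k+N, k+2N, ... (round-robin split).
my_items() {
  local rank="${SLURM_PROCID:-0}"    # this task's index, 0 outside Slurm
  local ntasks="${SLURM_NTASKS:-1}"  # total number of tasks, 1 outside Slurm
  local -a items=("$@")
  local i
  for i in "${!items[@]}"; do
    if (( i % ntasks == rank )); then
      echo "${items[i]}"
    fi
  done
}

# In worker.sh, started with "srun worker.sh" from test.sbatch:
#   mapfile -t mine < <(my_items ../fastqc/*.fq.gz)
#   for f in "${mine[@]}"; do fastqc "$f"; done
```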

MPI

To run an MPI program, request the number of MPI processes with --ntasks. With Open MPI, think of --ntasks as the number of slots available.

When Open MPI is built with Slurm support, mpirun detects the allocation and automatically uses all available slots, so no -np option is needed.

The simplest way to launch an MPI job in Slurm is with salloc.

For example, with Open MPI:

$ salloc -n 2 mpirun hostname

salloc: Granted job allocation 5230859

cpu-node132

cpu-node133

salloc: Relinquishing job allocation 5230859

salloc: Job allocation 5230859 has been revoked

mpirun launched 2 tasks in parallel; in this run, Slurm placed them on 2 different nodes.

To use MPI in an sbatch script:

mpi.sbatch:

#!/bin/bash

#SBATCH --ntasks=3

#SBATCH --nodes=3

mpirun hostname

Running sbatch mpi.sbatch gives output similar to:

cpu-node132

cpu-node133

cpu-node134
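To see which MPI rank each process is, the command started by mpirun can read the environment variables that Open MPI exports to every process it launches, OMPI_COMM_WORLD_RANK and OMPI_COMM_WORLD_SIZE. The script below is a minimal sketch under that assumption (Open MPI specifically; other MPI implementations use different variable names). Save it under a name such as rank_hello.sh (hypothetical), make it executable, and replace mpirun hostname with mpirun ./rank_hello.sh in mpi.sbatch.

```shell
#!/bin/bash
# rank_hello.sh (hypothetical name): print this process's MPI rank and host.
# Assumes Open MPI, which exports OMPI_COMM_WORLD_RANK and OMPI_COMM_WORLD_SIZE
# to each process started by mpirun; "?" is printed when they are missing.
rank_hello() {
  local rank="${OMPI_COMM_WORLD_RANK:-?}"
  local size="${OMPI_COMM_WORLD_SIZE:-?}"
  echo "rank $rank of $size on $HOSTNAME"
}

rank_hello
```

Each process prints one line; the order of the lines across processes is not guaranteed.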